First of all, Happy New Year to you all!

We have a great year ahead, so let's start it with something interesting.

We've talked about how Convolutional Neural Networks (CNNs) learn increasingly complex features from the input, layer by layer, through the convolutional filters in each layer.

But what does a convolutional filter really look like?

In today's post, let's try to visualize the convolutional filters of the LeNet model trained on the MNIST dataset (handwritten digit classification) - often considered the 'hello world' program of deep learning.

To visualize the filters, we can use the technique from the article "How convolutional neural networks see the world" by François Chollet (the author of the Keras library). The original article is available at the Keras Blog: https://blog.keras.io/how-convolutional-neural-networks-see-the-world.html

The original code is designed to work with the VGG16 model. Let’s modify it a bit to work with our LeNet model.

We need to load the LeNet model with its weights. You can follow the code here to train the model yourself and get the weights. Let's name the weights file 'lenet_weights.hdf5' and keep it in the 'data' directory, since the code below loads it from 'data/lenet_weights.hdf5'.

We'll start with the imports,

    from scipy.misc import imsave
    import numpy as np
    import time
    from keras import backend as K
    from keras.models import Sequential
    from keras.layers.convolutional import Conv2D
    from keras.layers.convolutional import MaxPooling2D
    from keras.layers.core import Activation
    from keras.layers.core import Flatten
    from keras.layers.core import Dense
    from keras.optimizers import SGD

We need to build and load the LeNet model with the weights. So, we define a function - build_lenet - for it.

    def build_lenet(width, height, depth, classes, weightsPath=None):
        # Initialize the model
        model = Sequential()

        # The first set of CONV => RELU => POOL layers
        model.add(Conv2D(20, (5, 5), padding="same",
                         input_shape=(height, width, depth)))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # The second set of CONV => RELU => POOL layers
        model.add(Conv2D(50, (5, 5), padding="same"))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))

        # The set of FC => RELU layers
        model.add(Flatten())
        model.add(Dense(500))
        model.add(Activation("relu"))

        # The softmax classifier
        model.add(Dense(classes))
        model.add(Activation("softmax"))

        # If a weights path is supplied, then load the weights
        if weightsPath is not None:
            model.load_weights(weightsPath)

        # Return the constructed network architecture
        return model

    # build the LeNet network with pre-trained weights
    model = build_lenet(width=28, height=28, depth=1, classes=10,
                        weightsPath="data/lenet_weights.hdf5")

We then print the summary of the model we loaded.

    model.summary()

This would print out the following,

[Figure: The summary of the LeNet model]

From this summary, we can see that the two convolutional layers are named ‘conv2d_1’ and ‘conv2d_2’.
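As a rough cross-check of the summary, the output shapes and parameter counts can be worked out by hand from the architecture in build_lenet. The sketch below is plain Python and does not call Keras; the helper names (conv_same, pool_2x2, dense) are made up for this illustration. It assumes "same" padding keeps the height and width unchanged and that a 2 x 2 pool with stride 2 halves them.

```python
# Hand calculation of output shapes and parameter counts for the LeNet
# variant defined in build_lenet. The helper names are illustrative only.

def conv_same(shape, filters, kernel):
    """Return (output shape, parameter count) for a 'same'-padded Conv2D."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + biases
    return (h, w, filters), params

def pool_2x2(shape):
    """Return the output shape of a 2x2 max pool with stride 2."""
    h, w, c = shape
    return (h // 2, w // 2, c)

def dense(n_in, n_out):
    """Return the parameter count of a fully-connected layer."""
    return n_in * n_out + n_out

shape = (28, 28, 1)                                   # MNIST input
shape, p_conv1 = conv_same(shape, filters=20, kernel=5)
shape = pool_2x2(shape)
shape, p_conv2 = conv_same(shape, filters=50, kernel=5)
shape = pool_2x2(shape)

flat = shape[0] * shape[1] * shape[2]                 # size after Flatten
p_fc1 = dense(flat, 500)
p_fc2 = dense(500, 10)
total = p_conv1 + p_conv2 + p_fc1 + p_fc2

print("shape before Flatten:", shape, "flattened size:", flat)
print("parameters per layer:", p_conv1, p_conv2, p_fc1, p_fc2)
print("total parameters:", total)
```

Most of the parameters sit in the first fully-connected layer, which is part of what we strip away next; the two convolutional layers we want to visualize are comparatively small.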

We now need to remove the fully-connected portion of the model. We call model.layers.pop() five times to remove everything after the last MaxPooling layer: the Flatten layer, the two Dense layers, and their two Activation layers.

    # we remove the fully-connected layers from the model
    model.layers.pop()
    model.layers.pop()
    model.layers.pop()
    model.layers.pop()
    model.layers.pop()
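To see which layers the five pop() calls take off, here is a plain-Python sketch that treats the layer stack as a list of labels. The labels are made up for illustration and are not the names Keras assigns; only the order mirrors build_lenet.

```python
# The layer stack from build_lenet, written as plain labels (illustrative
# names, not the ones Keras generates such as 'conv2d_1').
layers = [
    "conv_1", "relu_1", "pool_1",
    "conv_2", "relu_2", "pool_2",
    "flatten", "dense_500", "relu_3", "dense_10", "softmax",
]

# Remove the five trailing layers, mirroring the five pop() calls.
removed = [layers.pop() for _ in range(5)]

print("remaining:", layers)   # the two CONV => RELU => POOL blocks
print("removed:", removed)    # listed in the order they were popped
```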

We re-compile the model after popping the layers, and get a summary of it again.

    # compile the model
    opt = SGD(lr=0.01)
    model.compile(loss="categorical_crossentropy", optimizer=opt,
                  metrics=["accuracy"])
    model.summary()

The summary of the stripped-down model looks like this,

[Figure: The summary of the stripped-down model]

We define the normalize function – which normalizes the tensor by its L2-norm to allow a smooth gradient ascent – and the deprocess_image function – which transforms a tensor into a valid image – as suggested by the original article.

    # util function to convert a tensor into a valid image
    def deprocess_image(x):
        # normalize tensor: center on 0., ensure std is 0.1
        x -= x.mean()
        x /= (x.std() + 1e-5)
        x *= 0.1

        # clip to [0, 1]
        x += 0.5
        x = np.clip(x, 0, 1)

        # convert to RGB array
        x *= 255
        if K.image_data_format() == 'channels_first':
            x = x.transpose((1, 2, 0))
        x = np.clip(x, 0, 255).astype('uint8')
        return x

    # utility function to normalize a tensor by its L2 norm
    def normalize(x):
        return x / (K.sqrt(K.mean(K.square(x))) + 1e-5)
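To get a feel for what these two helpers do, here is a NumPy-only sketch that can be run without a Keras backend. The function names rms_normalize and deprocess are stand-ins written for this illustration, and the random arrays are placeholders for real gradients and images. Note that normalize divides by the square root of the mean of squares, that is, the root mean square, which equals the L2 norm divided by the square root of the number of elements.

```python
import numpy as np

def rms_normalize(x):
    """NumPy counterpart of normalize(): divide by the root mean square."""
    return x / (np.sqrt(np.mean(np.square(x))) + 1e-5)

def deprocess(x):
    """NumPy-only version of deprocess_image() for a channels-last array."""
    x = x.astype("float64")            # astype returns a copy
    x -= x.mean()                      # center on 0
    x /= (x.std() + 1e-5)              # unit standard deviation
    x *= 0.1                           # shrink to a standard deviation of 0.1
    x += 0.5                           # shift so most values fall in [0, 1]
    x = np.clip(x, 0, 1)
    return (x * 255).astype("uint8")   # scale to 8-bit pixel values

rng = np.random.default_rng(0)

# Placeholder "gradients" with a small magnitude.
grads = rng.normal(scale=0.01, size=(28, 28, 1))
scaled = rms_normalize(grads)
rms_after = np.sqrt(np.mean(np.square(scaled)))

# Placeholder "optimized input" with an arbitrary range.
raw = rng.normal(loc=128.0, scale=40.0, size=(28, 28, 1))
img = deprocess(raw)

print("RMS after normalization:", rms_after)
print("image dtype and range:", img.dtype, img.min(), img.max())
```

After normalization the gradient has a root mean square close to 1 whatever its original scale, so a fixed step size behaves consistently across filters. The deprocessed array is a uint8 image centered around mid-gray.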

We then define the parameters used for the visualization. We set the width and height to 28, the input size we used to train the LeNet model. We select ‘conv2d_2’ as the layer to visualize (we know the layer names from the model summary). We create a dictionary, layer_dict, that maps each layer name to its layer object. The kept_filters list collects the processed filters from the main loop.

    # dimensions of the generated pictures for each filter.
    img_width = 28
    img_height = 28

    # the name of the layer we want to visualize
    layer_name = 'conv2d_2'

    # this is the placeholder for the input images
    input_img = model.input

    # map each layer name to its layer object
    # (all layers are included, so 'conv2d_1' can be selected as well)
    layer_dict = dict([(layer.name, layer) for layer in model.layers])

    # list that collects the processed filters
    kept_filters = []

Next, we come to the main chunk of the code.

We loop over the 50 filters of the conv2d_2 layer, get the loss and gradients of each, and normalize the gradients (using the normalize function defined above). We then start with a grey image with random noise, and run gradient ascent for 20 steps. Finally, the processed filters are converted to images (using the deprocess_image function defined above) and added to the kept_filters list.

    for filter_index in range(0, 50):
        # we scan through the first 50 filters
        print('Processing filter %d' % filter_index)
        start_time = time.time()

        # we build a loss function that maximizes the activation
        # of the nth filter of the layer considered
        layer_output = layer_dict[layer_name].output
        loss = K.mean(layer_output[:, :, :, filter_index])

        # we compute the gradient of the input picture wrt this loss
        grads = K.gradients(loss, input_img)[0]

        # normalization trick: we normalize the gradient by its L2 norm
        grads = normalize(grads)

        # this function returns the loss and grads given the input picture
        iterate = K.function([input_img], [loss, grads])

        # step size for gradient ascent
        step = 1.

        # we start from a gray image with some random noise
        input_img_data = np.random.random((1, img_width, img_height, 1))
        input_img_data = (input_img_data - 0.5) * 20 + 128

        # we run gradient ascent for 20 steps
        for i in range(20):
            loss_value, grads_value = iterate([input_img_data])
            input_img_data += grads_value * step

            print('Current loss value:', loss_value)
            if loss_value <= 0.:
                # some filters get stuck to 0, we can skip them
                break

        # decode the resulting input image
        if loss_value > 0:
            img = deprocess_image(input_img_data[0])
            kept_filters.append((img, loss_value))
        end_time = time.time()
        print('Filter %d processed in %ds' % (filter_index, end_time - start_time))
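The idea behind the loop, gradient ascent on the input rather than on the weights, can be sketched on a toy problem in NumPy alone. This is not the Keras computation: it assumes a single hypothetical 5 x 5 linear filter whose activation is a dot product with the input patch, so the gradient with respect to the input is simply the filter itself.

```python
import numpy as np

rng = np.random.default_rng(0)

# A hypothetical 5x5 filter that responds to a dark-to-bright vertical edge.
w = np.zeros((5, 5))
w[:, :2] = -1.0
w[:, 3:] = 1.0

def activation(x):
    """Response of the toy filter to a 5x5 patch (a single dot product)."""
    return float(np.sum(w * x))

def gradient(x):
    """Gradient of the activation with respect to the input patch."""
    return w  # the activation is linear in x, so the gradient is the filter

# Start from a gray patch with a little random noise, as in the loop above.
x = (rng.random((5, 5)) - 0.5) * 20 + 128
start = activation(x)

step = 1.0
for _ in range(20):
    g = gradient(x)
    g = g / (np.sqrt(np.mean(np.square(g))) + 1e-5)  # same RMS normalization
    x = x + step * g                                  # move uphill (ascent)

end = activation(x)
print("activation before:", start)
print("activation after: ", end)
print("left columns mean:", x[:, :2].mean(), "right columns mean:", x[:, 3:].mean())
```

The patch drifts toward the pattern the filter responds to: the left columns get darker and the right columns brighter. In the real model the gradient comes from K.gradients and depends on the current input, but the update rule is the same.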

With the images of the filters ready, we just need to stitch them together, and save the resulting image.

    # we will stitch the best 36 filters on a 6 x 6 grid.
    n = 6

    # the filters that have the highest loss are assumed to be better-looking.
    # we will only keep the top 36 filters.
    kept_filters.sort(key=lambda x: x[1], reverse=True)
    kept_filters = kept_filters[:n * n]

    # build a black picture with enough space for
    # our 6 x 6 filters of size 28 x 28, with a 5px margin in between
    margin = 5
    width = n * img_width + (n - 1) * margin
    height = n * img_height + (n - 1) * margin
    stitched_filters = np.zeros((width, height, 3))

    # fill the picture with our saved filters
    for i in range(n):
        for j in range(n):
            img, loss = kept_filters[i * n + j]
            stitched_filters[(img_width + margin) * i: (img_width + margin) * i + img_width,
                             (img_height + margin) * j: (img_height + margin) * j + img_height, :] = img

    # save the result to disk
    imsave('lenet_filters_%dx%d.png' % (n, n), stitched_filters)
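The stitching loop above indexes kept_filters[i * n + j] for every grid cell, so it assumes at least n * n filters survived the gradient ascent. If fewer did, one possible variation is to fill only the cells for which a tile exists and leave the rest black. The sketch below shows that layout logic in NumPy; the stitch function and the gray placeholder tiles are made up for this illustration.

```python
import numpy as np

def stitch(tiles, n, tile_h, tile_w, margin=5):
    """Place up to n*n (tile_h, tile_w, channels) tiles on a black RGB canvas."""
    height = n * tile_h + (n - 1) * margin
    width = n * tile_w + (n - 1) * margin
    canvas = np.zeros((height, width, 3), dtype="uint8")
    for index, tile in enumerate(tiles[:n * n]):
        row, col = divmod(index, n)          # fill the grid row by row
        top = (tile_h + margin) * row
        left = (tile_w + margin) * col
        # a single-channel tile broadcasts across the three RGB channels
        canvas[top:top + tile_h, left:left + tile_w, :] = tile
    return canvas

# Ten gray placeholder tiles standing in for the processed filters.
tiles = [np.full((28, 28, 1), 200, dtype="uint8") for _ in range(10)]
canvas = stitch(tiles, n=6, tile_h=28, tile_w=28)

print(canvas.shape)
```

With 28 x 28 tiles, a 6 x 6 grid, and a 5px margin, the canvas is 6 * 28 + 5 * 5 = 193 pixels on each side, the same size the code above computes.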

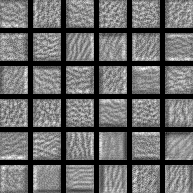

The stitched set of filters looks like this,

[Figure: The visualized convolutional filters of the LeNet model]

Looking at these filters, we can get a sense of how they work. They try to match lines, edges, and textures in various directions in the input images. When an image is presented to the trained model, it is matched against these features, and the combination of matched features is used to determine what the image is.
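To make the idea of "matching" concrete, here is a small NumPy sketch with two hand-written 3 x 3 filters applied to a tiny image containing a vertical edge. The filters and the correlate_valid helper are written for this illustration and are not taken from the trained LeNet model; the sliding-window sum of products is the same operation a convolutional layer performs, without padding here.

```python
import numpy as np

def correlate_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# A 6x6 image: dark on the left half, bright on the right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written 3x3 filters (illustrative, not learned).
vertical_edge = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=float)
horizontal_edge = vertical_edge.T

v_response = correlate_valid(image, vertical_edge)
h_response = correlate_valid(image, horizontal_edge)

print("vertical-edge filter, strongest response:  ", v_response.max())
print("horizontal-edge filter, strongest response:", np.abs(h_response).max())
```

The vertical-edge filter responds strongly where its window straddles the edge, while the horizontal-edge filter does not respond at all. The learned filters play the same role, except that their patterns come from training rather than being written by hand.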

