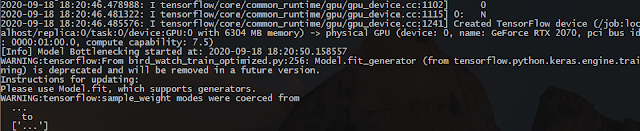

If you have been using data generators in Keras, such as ImageDataGenerator, to augment and load your input data, then you are probably familiar with the *_generator() methods (fit_generator(), evaluate_generator(), etc.) used to pass those generators when training the model.

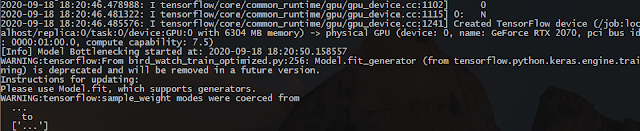

But if you have recently switched to TensorFlow 2.1 or later (and tf.keras), you may have seen a warning message such as:

Model.fit_generator (from tensorflow.python.keras.engine.training) is deprecated and will be removed in a future version.

Instructions for updating:

Please use Model.fit, which supports generators.

Or,

Model.evaluate_generator (from tensorflow.python.keras.engine.training) is deprecated and will be removed in a future version.

Instructions for updating:

Please use Model.evaluate, which supports generators.

fit_generator() Deprecation Warning

The warning appears because in tf.keras, as well as in the latest version of multi-backend Keras, model.fit() and model.evaluate() accept generators directly, so the separate *_generator() methods are no longer needed.
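To make the change concrete, here is a minimal sketch of passing a generator straight to model.fit() and model.evaluate(). It assumes TensorFlow 2.1 or later is installed. The batch_generator() function, the tiny model, and the random data are hypothetical stand-ins for whatever generator (for example, one built with ImageDataGenerator) and model you already have.

```python
import numpy as np
import tensorflow as tf


def batch_generator(batch_size=8, num_features=4, seed=0):
    """Hypothetical stand-in for a data generator: yields (inputs, targets) forever."""
    rng = np.random.default_rng(seed)
    while True:
        x = rng.normal(size=(batch_size, num_features)).astype("float32")
        # Toy binary target: 1.0 if the features of a sample sum to a positive value.
        y = (x.sum(axis=1, keepdims=True) > 0).astype("float32")
        yield x, y


# A deliberately tiny model, only here so the example is self-contained.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Previously: model.fit_generator(batch_generator(), steps_per_epoch=5, epochs=2)
# The generator never ends, so steps_per_epoch tells Keras how many batches make an epoch.
history = model.fit(batch_generator(), steps_per_epoch=5, epochs=2, verbose=0)

# Previously: model.evaluate_generator(batch_generator(seed=1), steps=3)
loss, accuracy = model.evaluate(batch_generator(seed=1), steps=3, verbose=0)
print("loss:", loss, "accuracy:", accuracy)
```

In this sketch the call sites change only in the method name: the generator and the steps_per_epoch / steps arguments are passed exactly as they were to the deprecated methods.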