"We may be born a little too late to explore earth, and born too early to explore the universe. Yet, we may be here just in time to witness the rise of AI.

And we can help build that future." - Deep Learning On Windows, Thimira Amaratunga

You might come from a traditional AI and Machine Learning background, or you might be just starting to learn about AI. You could have been in the field for many years, or only now be getting your feet wet. In any case, what you might find confusing at the initial stage is the multitude of terms such as ‘Artificial Intelligence’, ‘Machine Learning’, and ‘Deep Learning’.

In recent years, the term ‘Deep Learning’ has become a buzzword, so much so that it is now associated with some consumer technologies. Tech giants like Google, Apple, Amazon, Microsoft, IBM, and many others are actively engaged in AI innovation, while organizations specializing in AI such as DeepMind and OpenAI have emerged in the past few years.

Now, you might be wondering what each of these terms (Artificial Intelligence, Machine Learning, Deep Learning) means. You might even be trying to figure out how they relate to each other, whether they can be used interchangeably, and most importantly, where each of them comes from.

These are common questions when we first start getting into Deep Learning.

Figure: The confusion of Deep Learning

So, after much research, here’s a simplified explanation of how the terms Artificial Intelligence, Machine Learning, and Deep Learning came to be, and how they relate to each other.

Artificial Intelligence

Artificial Intelligence is the idea that machines (or computers) can be built with intelligence equal to (or greater than) that of a human, giving them the capability to perform tasks that require human intelligence.

The idea of an intelligent machine has been around since the 1300s, and developed through the 19th century, when mathematicians like George Boole and Gottlob Frege refined the concepts of Propositional Logic and Predicate Calculus. But the Dartmouth Conference of 1956 is commonly considered the starting point of the formal research field of Artificial Intelligence. Since then, the field of AI has gone through many ups and downs and has branched out into many sub-fields. There have been attempts at applying AI to various fields (such as medicine, finance, aviation, and machinery) with varying degrees of success.

Around the late 1990s and early 2000s, researchers identified a problem in their approach to AI that was slowing its progress: in order to artificially create a machine with intelligence, one must first understand how intelligence works. But even today, we do not have a complete definition of what we call "intelligence".

In order to tackle the problem, they decided to work from the ground up: rather than trying to build an intelligence directly, they could look into building a system that can grow its own intelligence. This idea created the new sub-field of AI called Machine Learning.

Machine Learning

Machine Learning is a subset of Artificial Intelligence that aims to give machines the ability to learn without being explicitly programmed. The idea is that such machines (or computer programs), once built, will be able to evolve and adapt when they are exposed to new data.

The main idea behind Machine Learning is the ability of a learner to generalize from its experience. The learner (or the program), once given a set of training cases, must be able to build a generalized model upon them, which would allow it to decide upon new cases with sufficient accuracy.

Based on the approach, there are three learning methods for Machine Learning systems:

- Supervised Learning – the system is given a set of labelled cases (the training set) and asked to build a generalized model from them that can act on unseen cases.

- Unsupervised Learning – the system is given a set of unlabelled cases and asked to find patterns in them. This is good for discovering hidden patterns.

- Reinforcement Learning – the system is asked to take an action, and is given a reward. The system must learn which actions yield the most reward in a given situation.
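To make the supervised case concrete, here is a minimal sketch in plain Python. It assumes a made-up training set of (height, weight) measurements with "small"/"large" labels, and uses a 1-nearest-neighbour rule as the learner. Both the data and the choice of algorithm are illustrative assumptions, not something prescribed by the text above.

```python
import math

# Hypothetical labelled training set: (height_cm, weight_kg) -> label.
training_set = [
    ((150.0, 50.0), "small"),
    ((155.0, 55.0), "small"),
    ((160.0, 58.0), "small"),
    ((180.0, 85.0), "large"),
    ((185.0, 90.0), "large"),
    ((190.0, 95.0), "large"),
]


def predict(case, labelled_cases):
    """Return the label of the training case closest to `case`."""
    _, nearest_label = min(
        labelled_cases,
        key=lambda item: math.dist(case, item[0]),  # Euclidean distance
    )
    return nearest_label


# Cases the learner has never seen before.
unseen_cases = [(152.0, 52.0), (188.0, 92.0)]
predictions = [predict(case, training_set) for case in unseen_cases]
print(predictions)  # → ['small', 'large']
```

The learner never saw the two unseen cases, yet it can still label them because it generalizes from the labelled examples it was given.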

With these techniques, the field of machine learning flourished. They were particularly successful in the areas of Computer Vision and Text Analysis.

By around 2010, a few things happened that influenced machine learning further. With ever-advancing technology, more computing power became available, making it easier to evaluate more complex machine learning models. Data processing and storage became cheaper, so more data became available for machine learning systems to consume. In parallel, our understanding of how the natural brain works also increased, allowing us to model new machine learning algorithms on it.

All of these developments propelled a new area of Machine Learning called Deep Learning.

Deep Learning

Deep Learning is a subset of Machine Learning that focuses on a family of algorithms inspired by our understanding of how the brain works to acquire knowledge. It’s also referred to as Deep Structured Learning or Hierarchical Learning.

One definition of Deep Learning is:

"A sub-field within machine learning that is based on algorithms for learning multiple levels of representation in order to model complex relationships among data. Higher-level features and concepts are thus defined in terms of lower-level ones, and such a hierarchy of features is called a deep architecture" - Deep Learning: Methods and Applications

Deep Learning builds upon the idea of Artificial Neural Networks and scales it up to consume large amounts of data by deepening the networks (adding more layers). With a large number of layers, a deep learning model is capable of extracting features from raw data and "learning" about those features little by little in each layer, building up to higher-level knowledge of the data. This technique is called Hierarchical Feature Learning, and it allows such systems to automatically learn complex features through multiple levels of abstraction with minimal human intervention.

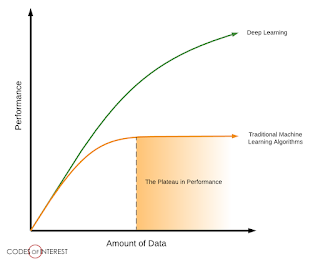

"The hierarchy of concepts allows the computer to learn complicated concepts by building them out of simpler ones. If we draw a graph showing how these concepts are built on top of each other, the graph is deep, with many layers. For this reason, we call this approach to AI deep learning." - Deep Learning. MIT Press, Ian Goodfellow and Yoshua Bengio and Aaron Courville.One of the most distinct characteristics of Deep Learning – and one that made it quite popular and practical – is that it scales well, that is, the more data given to it, the better it performs. Unlike many older machine learning algorithms which has a higher bound to the amount of data they can ingest – often called a "plateau in performance" – Deep Learning models has no such limitations (theoretically) and they may be able to go beyond human comprehension, which is evident with the modern deep learning based image processing systems being able to outperform humans.

Figure: The Plateau in Performance in Traditional vs. Deep Learning

Convolutional Neural Networks

Convolutional Neural Networks are a prime example of Deep Learning. They were inspired by how neurons are arranged in the visual cortex (the area of the brain that processes visual input). Here, not all neurons are connected to all of the inputs from the visual field. Instead, each group of neurons responds to a small region of the visual field (called its receptive field), and these regions ‘tile’ the visual field while partially overlapping each other.

Convolutional Neural Networks (CNNs) work in a similar way. They process the input in overlapping blocks using mathematical convolution operators (which approximate how a receptive field works).
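The idea of processing an input in overlapping blocks can be sketched in a few lines of plain Python. The `convolve2d` helper, the tiny 4x4 image, and the hand-picked vertical-edge filter below are all illustrative assumptions; in a real CNN the filter values are learned during training. Like most deep learning libraries, this sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value only "sees" one small block of the input.
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

Neighbouring output values are computed from blocks that share pixels, which is the overlap described above. The filter responds only in the middle column, where the edge sits.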

Figure: A Convolutional Neural Network

The first convolution layer uses a set of convolution filters to identify a set of low-level features from the input image. These low-level features are then pooled (by the pooling layers) and given as the input to the next convolution layer, which uses another set of convolution filters to identify a set of higher-level features from the lower-level features identified earlier. This continues for several layers, where each convolution layer uses the output of the previous layer to identify higher-level features than the previous layer did. Finally, the output of the last convolution layer is passed on to a set of fully connected layers for the final classification.
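The flow described above (convolution, pooling, another convolution, then a fully connected layer) can be traced with a toy forward pass in plain Python. Everything here is an assumption made for illustration: the helper names, the 6x6 image, and the hand-picked filter and weight values. A real network would use many filters per layer and learn all of these values from data.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(len(image[0]) - kw + 1)
        ]
        for i in range(len(image) - kh + 1)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the strongest response in each non-overlapping size x size block."""
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


def fully_connected(inputs, weights, biases):
    """One dense layer: a weighted sum of all inputs per output unit."""
    return [
        sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        for unit_weights, bias in zip(weights, biases)
    ]


# Hypothetical 6x6 image: dark left half, bright right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Layer 1: a hand-picked low-level filter (vertical edge), then pooling.
edge_filter = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
low_level = relu(convolve2d(image, edge_filter))   # 4x4 feature map
pooled = max_pool(low_level)                        # 2x2 feature map

# Layer 2: a filter that combines the pooled low-level features.
combine_filter = [[1, 1], [1, 1]]
high_level = relu(convolve2d(pooled, combine_filter))  # 1x1 feature map

# Flatten and classify with a fully connected layer (two made-up classes).
flat = [value for row in high_level for value in row]
scores = fully_connected(flat, weights=[[0.5], [-0.5]], biases=[0.0, 1.0])
predicted_class = scores.index(max(scores))
print(scores, predicted_class)
```

Each stage consumes only the output of the stage before it, so the second filter never looks at raw pixels, only at the features found by the first.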

How Deep?

With the capabilities of Deep Learning grasped, there’s one question that usually comes up when one first learns about Deep Learning:

If we say that deeper and more complex models give Deep Learning the ability to surpass even human capabilities, then how deep should a machine learning model be to be considered a Deep Learning model?

It turns out we were asking the wrong question. We need to look at Deep Learning from a different angle to understand it.

Let’s take a step back and see how a Deep Learning model works. Let's take the Convolutional Neural Networks as the example again.

As mentioned above, the convolution filters of a CNN attempt to identify lower-level features first, and then use those features to identify higher-level features gradually through multiple steps.

This is the Hierarchical Feature Learning we talked about earlier. It is the key to Deep Learning, and it is what differentiates Deep Learning from traditional Machine Learning algorithms.

Figure: Hierarchical Feature Learning

A Deep Learning model (such as a Convolutional Neural Network) does not try to understand the entire problem at once. That is, it does not try to grasp all the features of the input at once, as traditional algorithms did. Instead, it looks at the input piece by piece and derives lower-level patterns or features from it. It then uses these lower-level features to gradually identify higher-level features, through many layers, hierarchically. This allows Deep Learning models to learn complicated patterns by gradually building them up from simpler ones. It also allows Deep Learning models to comprehend the world better: they not only ‘see’ the features, but also see the hierarchy in which those features are built upon one another.

And of course, having to learn features hierarchically means that the model must have many layers, which means that such a model will be ‘deep’.

That brings us back to our original question. It is not that we call a model Deep Learning because it is deep. It is that in order to achieve hierarchical learning, the model needs to be deep. The depth is a byproduct of implementing Hierarchical Feature Learning.

So, how do we identify whether a model is a Deep Learning model or not?

Simply put, if the model uses Hierarchical Feature Learning, identifying lower-level features first and then building upon them to identify higher-level features (e.g. by using convolution filters), then it is a Deep Learning model. If not, then no matter how many layers the model has (e.g. a neural network with only fully connected layers), it is not considered a Deep Learning model.

Putting it all together

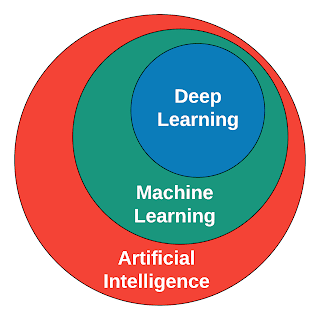

Getting back to our original question: How do the areas of Artificial Intelligence, Machine Learning, and Deep Learning relate to each other?

Figure: How Artificial Intelligence, Machine Learning, and Deep Learning relate to each other

Simply put, Machine Learning is a subset of Artificial Intelligence, and Deep Learning is a subset of Machine Learning, all working towards the common goal of creating an intelligent machine.

Figure: The evolution of Artificial Intelligence, Machine Learning, and Deep Learning

With the capabilities demonstrated and the success achieved by Deep Learning, we may be a step closer to the ultimate goal of Artificial Intelligence: building a machine with human-level (or greater) intelligence.

To learn more about Deep Learning in detail, and learn to build your own deep learning models, check out my book: Build Deeper: The Path to Deep Learning.

Now that you have an idea of what Deep Learning is, head over to the Codes of Interest Blog to get hands-on with Deep Learning.
